DOA estimator

This article describes a project I developed for the course Sound Analysis, Synthesis And Processing at Politecnico di Milano.

The whole class worked on the same scenario: a Uniform Linear Array (ULA) of 16 MEMS microphones spanning 45 cm records, at a sampling rate of 8 kHz, a source moving in front of the array.
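
This worked example makes three assumptions, all my reading rather than something stated explicitly: the 45 cm is the distance between the first and last of the M = 16 microphones, the 8 kHz is the sampling rate f_s, and c ≈ 343 m/s. Under those assumptions, the geometry gives the element spacing d and the usual half-wavelength condition for avoiding spatial aliasing:

$$
d = \frac{0.45\,\text{m}}{M-1} = \frac{0.45\,\text{m}}{15} = 0.03\,\text{m},
\qquad
f_{\max} = \frac{c}{2d} \approx \frac{343\,\text{m/s}}{0.06\,\text{m}} \approx 5.7\,\text{kHz} > \frac{f_s}{2} = 4\,\text{kHz}
$$

So every frequency in the recording satisfies d ≤ λ/2, and the array does not alias spatially at any steering angle.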

The goal was to localize the sound source, i.e., to estimate its Direction of Arrival (DOA), by implementing a delay-and-sum beamformer suitable for wide-band sources. Spatial filtering is intrinsically narrow-band. The processing therefore splits the spectrum into frequency bands (via the STFT) and proceeds frame by frame, so that the sound content is tracked over time and its angle of arrival is reconstructed consistently.

The implementation process

Development is bottom-up: the fundamental blocks are validated first, and only then integrated into the system.

The following diagram of classes and function calls summarizes the operational flow:

Below, I describe each module in the order in which it was implemented and tested.

- AudioData: this is the data entry point. It loads multichannel audio from file, stores it, and normalizes it.

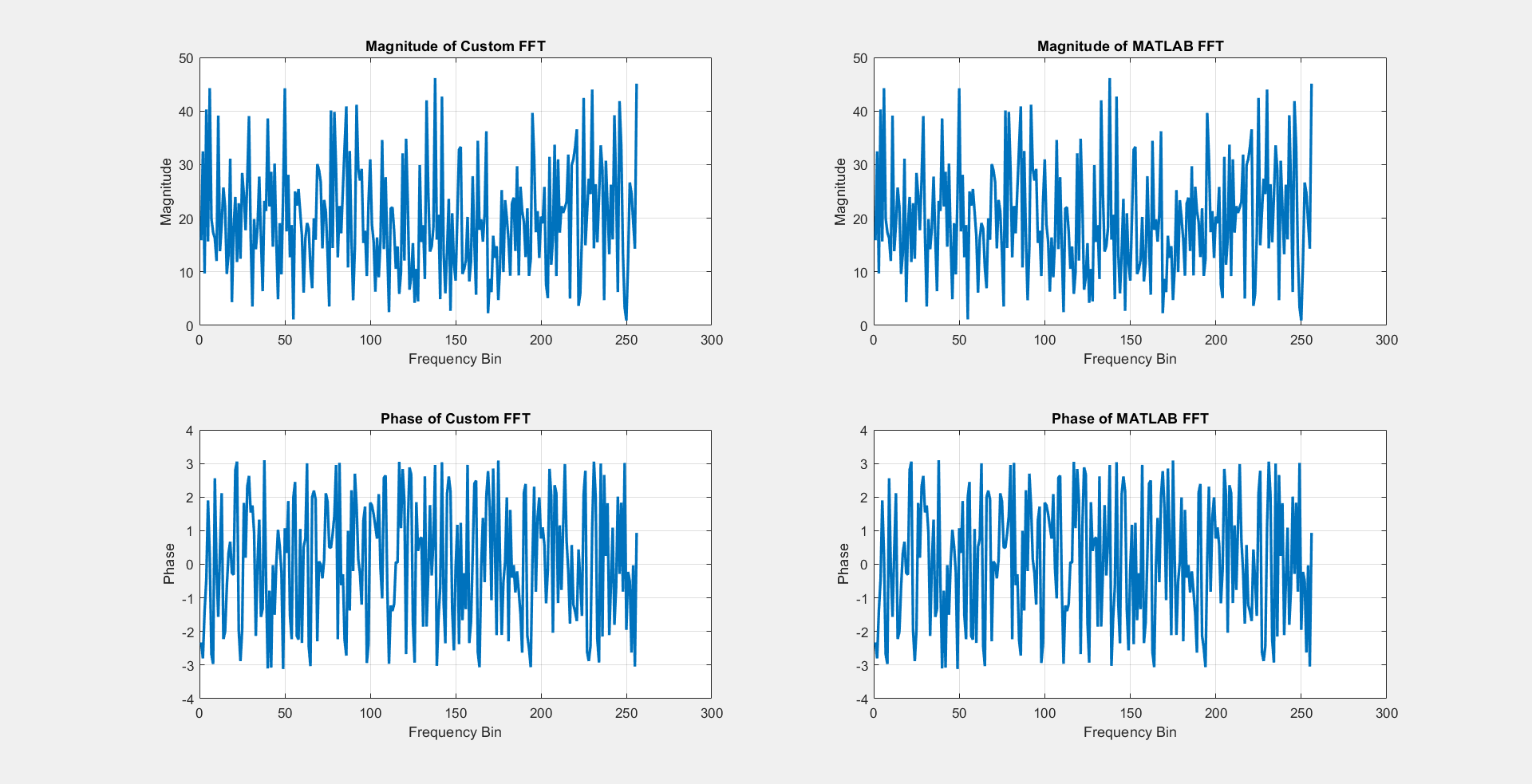
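
The post does not say which normalization AudioData applies. Here is a minimal Python/NumPy sketch that assumes a single peak normalization shared by all channels, so the level differences between microphones survive. `normalize_multichannel` is a hypothetical name, and file loading is left out.

```python
import numpy as np


def normalize_multichannel(x: np.ndarray) -> np.ndarray:
    """Scale a (num_samples, num_channels) signal so its global peak is 1.

    One gain is shared by all channels, so the relative levels between
    microphones (useful information for the array) are left untouched.
    """
    peak = np.max(np.abs(x))
    if peak == 0:
        return x.astype(float)  # silent input: nothing to scale
    return x / peak


# Example: 16 channels of noise with different levels
rng = np.random.default_rng(0)
x = rng.standard_normal((8000, 16)) * np.linspace(0.5, 2.0, 16)
y = normalize_multichannel(x)
print(np.max(np.abs(y)))  # 1.0
```

Normalizing each channel separately would also be possible. It would, however, discard inter-channel amplitude information, which is why a shared gain is the safer default for array processing.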

- CustomFFT: a recursive FFT for power-of-two lengths, based on the radix-2 Cooley–Tukey algorithm. Its output is compared against MATLAB’s fft to verify numerical correctness.

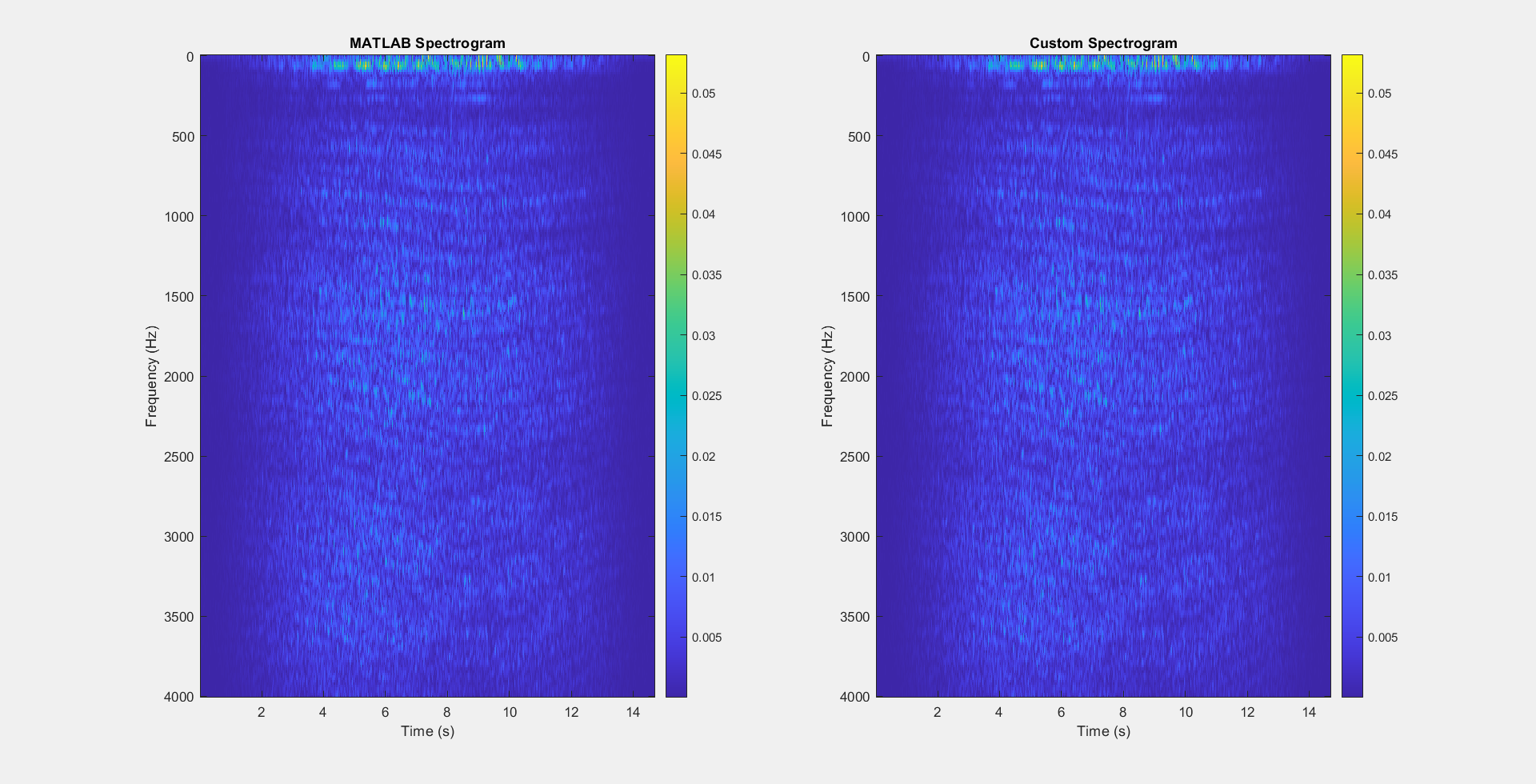
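
For reference, here is a Python/NumPy sketch of the same radix-2 recursion. It translates the idea and is not the project's MATLAB code; `custom_fft` is a hypothetical name. The algorithm splits the input into even- and odd-indexed samples, transforms each half recursively, and merges the halves with the twiddle factors. The comparison with `np.fft.fft` plays the role of the check against MATLAB's fft.

```python
import numpy as np


def custom_fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    x = np.asarray(x, dtype=complex)
    n = x.shape[0]
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    if n == 1:
        return x.copy()  # the DFT of a single sample is the sample itself
    even = custom_fft(x[0::2])  # DFT of even-indexed samples
    odd = custom_fft(x[1::2])   # DFT of odd-indexed samples
    twiddled = np.exp(-2j * np.pi * np.arange(n // 2) / n) * odd
    # Butterfly: first and second half of the spectrum
    return np.concatenate([even + twiddled, even - twiddled])


x = np.random.default_rng(1).standard_normal(256)
print(np.allclose(custom_fft(x), np.fft.fft(x)))  # True
```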

- STFTProcessor: computes the Short-Time Fourier Transform of a single channel. The function takes as input the signal, fs, the window length, the overlap, and nfft, and returns the STFT matrix S together with the frequency axis f and the time axis t. Validation is performed against MATLAB’s spectrogram.

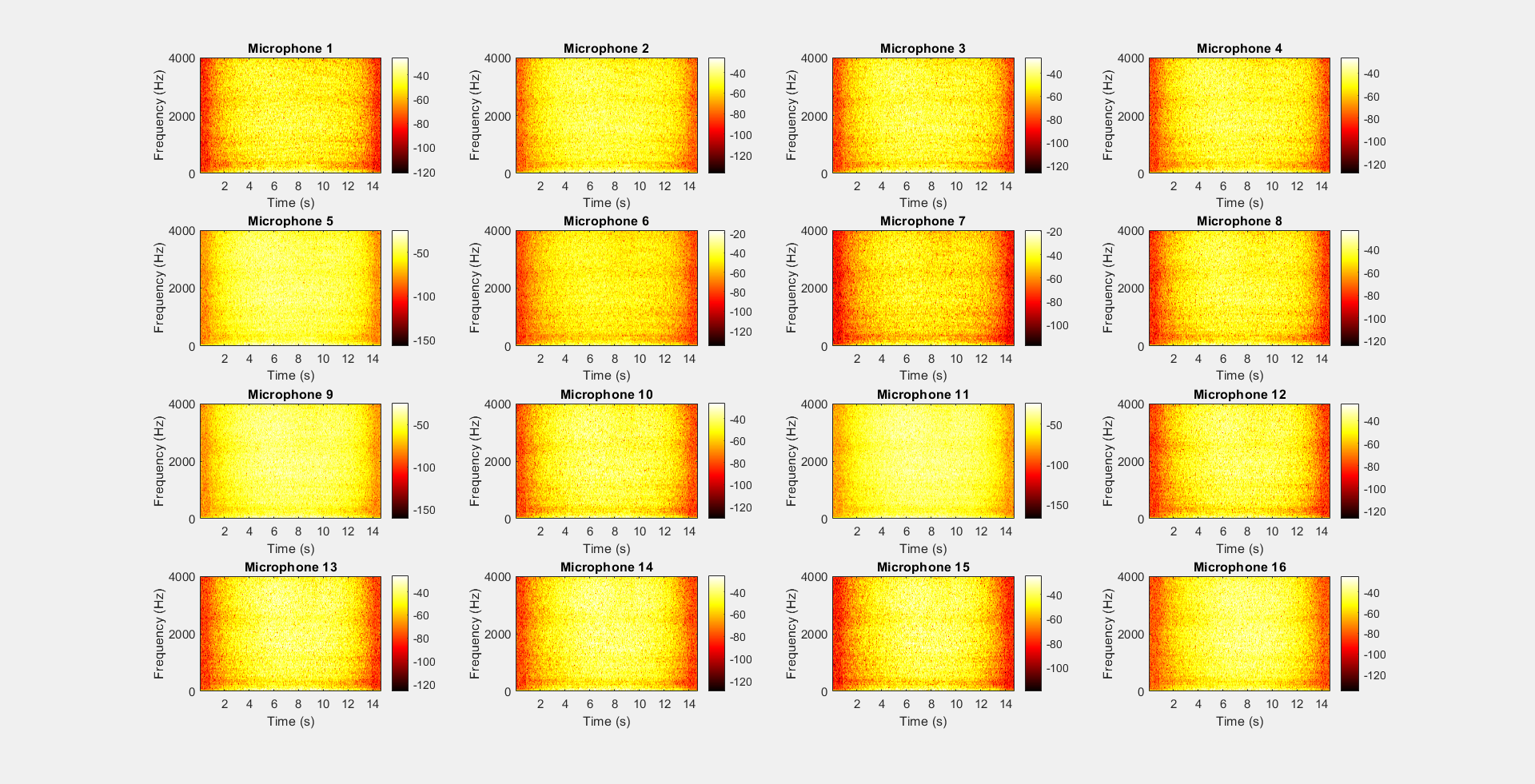
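
Here is a minimal single-channel STFT sketch with the same inputs and outputs: signal, fs, window length, overlap and nfft in; S, f and t out. Three details are assumptions chosen to resemble MATLAB's spectrogram output: the Hann window, the one-sided spectrum, and frame times taken at window centres. The function name `stft` is hypothetical.

```python
import numpy as np


def stft(x, fs, win_len, overlap, nfft):
    """One-sided STFT of a real 1-D signal.

    Returns S with shape (nfft // 2 + 1, num_frames), the frequency axis f in
    Hz and the time axis t in seconds (centre of each frame).
    """
    hop = win_len - overlap
    if hop <= 0 or nfft < win_len or len(x) < win_len:
        raise ValueError("need overlap < win_len <= nfft and len(x) >= win_len")
    window = np.hanning(win_len)  # assumed window shape
    num_frames = 1 + (len(x) - win_len) // hop
    S = np.empty((nfft // 2 + 1, num_frames), dtype=complex)
    for k in range(num_frames):
        frame = x[k * hop : k * hop + win_len] * window
        S[:, k] = np.fft.rfft(frame, n=nfft)  # zero-padded to nfft points
    f = np.arange(nfft // 2 + 1) * fs / nfft
    t = (np.arange(num_frames) * hop + win_len / 2) / fs
    return S, f, t


fs = 8000
x = np.sin(2 * np.pi * 1000 * np.arange(fs) / fs)  # 1 s of a 1 kHz tone
S, f, t = stft(x, fs, win_len=256, overlap=128, nfft=512)
print(S.shape)                        # (257, 61)
print(f[np.argmax(np.abs(S[:, 0]))])  # 1000.0
```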

- AllChannelSTFT: to move to multichannel processing, AllChannelSTFT applies the same STFT scheme to all microphones in the array, treating channels independently but with consistent parameters. Tests check correctness and output alignment for each channel.

- GetCovMatrix: starting from the multichannel STFTs, GetCovMatrix builds the spatial covariance matrix band by band. Verification is based on checking the Hermitian property of the matrix.
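
The multichannel STFT and the covariance step can be sketched together. The sketch assumes the STFT tensor is stored as (microphones, bins, frames) and that each covariance is averaged over the last `avg_frames` frames. Both the layout and `avg_frames` are hypothetical choices, since the post does not say how many snapshots enter each estimate. With `avg_frames=1`, the estimate reduces to the rank-one outer product x xᴴ.

```python
import numpy as np


def all_channel_stft(x, win_len, overlap, nfft):
    """One-sided STFT of every channel (column) of x, same parameters for all.

    x has shape (num_samples, M); the result X has shape
    (M, nfft // 2 + 1, num_frames).
    """
    hop = win_len - overlap
    num_frames = 1 + (x.shape[0] - win_len) // hop
    window = np.hanning(win_len)[:, None]  # assumed Hann window
    frames = [
        np.fft.rfft(x[k * hop : k * hop + win_len] * window, n=nfft, axis=0)
        for k in range(num_frames)
    ]  # each frame: (nfft // 2 + 1, M)
    return np.stack(frames, axis=-1).transpose(1, 0, 2)


def get_cov_matrix(X, avg_frames=1):
    """Spatial covariance per bin and frame, shape (F, T, M, M).

    R[f, t] is the mean of x x^H over the last avg_frames frames, where
    x = X[:, f, t] is the vector of the M microphone spectra.
    """
    # Outer products for every (f, t): outer[f, t, m, n] = x_m * conj(x_n)
    outer = np.einsum("mft,nft->ftmn", X, X.conj())
    R = np.empty_like(outer)
    for t in range(outer.shape[1]):
        start = max(0, t - avg_frames + 1)
        R[:, t] = outer[:, start : t + 1].mean(axis=1)
    return R


rng = np.random.default_rng(0)
x = rng.standard_normal((2048, 16))  # stand-in for 16 normalized channels
X = all_channel_stft(x, win_len=256, overlap=128, nfft=256)
R = get_cov_matrix(X, avg_frames=4)
print(R.shape)                                          # (129, 15, 16, 16)
print(np.allclose(R, np.conj(np.swapaxes(R, -1, -2))))  # True: Hermitian
```

The last line is the same Hermitian-property check used to validate GetCovMatrix: R must equal its own conjugate transpose for every bin and frame.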

- GetSteeringVector: computes the steering vector for a given angle and frequency, taking into account the microphone spacing d, the speed of sound c, and the number of microphones. In practice, it assigns to each array element the phase expected for the incoming wavefront.
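
Here is a sketch of a far-field steering vector for a ULA. It rests on several conventions that the post does not confirm:

- Angles are measured from broadside.
- Element m sits at position m·d.
- A delay τ maps to the phase factor e^{-j2πfτ}.
- The defaults are d = 0.03 m (45 cm over 15 gaps) and c = 343 m/s.

```python
import numpy as np


def get_steering_vector(theta_deg, f, d=0.03, c=343.0, num_mics=16):
    """Far-field steering vector of a ULA, shape (num_mics,).

    theta_deg is measured from broadside; element m sits at position m * d,
    so a plane wave from theta reaches it with delay m * d * sin(theta) / c
    relative to element 0, i.e. a phase of -2*pi*f*delay.
    """
    m = np.arange(num_mics)
    tau = m * d * np.sin(np.deg2rad(theta_deg)) / c  # per-element delays in s
    return np.exp(-2j * np.pi * f * tau)


a = get_steering_vector(30.0, 1000.0)
print(a.shape)                      # (16,)
print(np.allclose(np.abs(a), 1.0))  # True: only the phases change
```

At broadside (θ = 0) all delays vanish and the vector is all ones. As θ moves toward endfire, the phase step between neighbouring microphones grows with sin θ.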

-

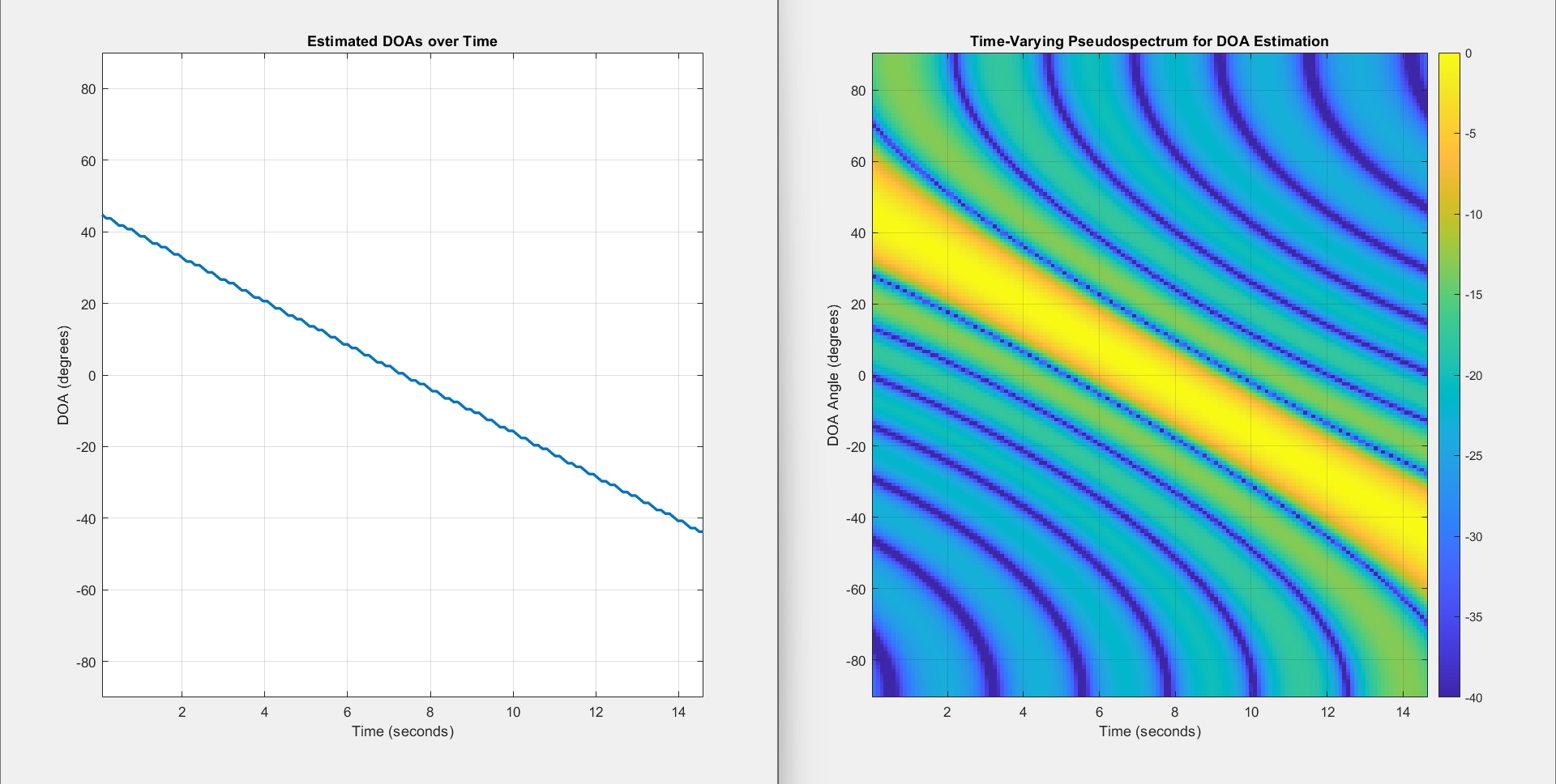

Beamform: implements the core of the system: delay-and-sum. For each band and for each frame, it combines the covariance with steering vectors over the angular interval of interest, generating a power map versus angle and time (p_theta_time). The quality of this map directly affects the final estimates.
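
Here is a minimal vectorized sketch of this step. It assumes the delay-and-sum output power wᴴRw with weights w = a/M, evaluated on every bin and then summed across bins. That incoherent wide-band combination is an assumption, because the post does not specify how bands are merged. The steering vectors are rebuilt inline so the snippet is self-contained, with the assumed values d = 0.03 m and c = 343 m/s. The test input is a synthetic covariance of a single plane wave from 20°.

```python
import numpy as np


def beamform(R, freqs, thetas_deg, d=0.03, c=343.0):
    """Wide-band delay-and-sum pseudospectrum.

    R: covariance matrices with shape (F, T, M, M); freqs: bin centres in Hz.
    Returns p_theta_time with shape (len(thetas_deg), T): for every angle and
    frame, the output power w^H R w summed incoherently over the F bins.
    """
    M = R.shape[-1]
    freqs = np.asarray(freqs, dtype=float)
    m = np.arange(M)
    sin_t = np.sin(np.deg2rad(np.asarray(thetas_deg, dtype=float)))
    # Steering vectors for every bin and angle: A[f, k, m], shape (F, K, M)
    A = np.exp(-2j * np.pi * freqs[:, None, None] * m * d * sin_t[None, :, None] / c)
    W = A / M  # delay-and-sum weights w = a / M
    # Output power w^H R w for every (bin, frame, angle): shape (F, T, K)
    P = np.einsum("fkm,ftmn,fkn->ftk", W.conj(), R, W, optimize=True).real
    return P.sum(axis=0).T  # sum over bins -> (K, T)


# Synthetic test: one plane wave from 20 degrees, 4 bins, 3 identical frames
freqs = np.array([500.0, 1000.0, 1500.0, 2000.0])
m = np.arange(16)
a0 = np.exp(-2j * np.pi * freqs[:, None] * m * 0.03 * np.sin(np.deg2rad(20.0)) / 343.0)
R = np.einsum("fm,fn->fmn", a0, a0.conj())[:, None].repeat(3, axis=1)
thetas = np.arange(-90, 91)
p = beamform(R, freqs, thetas)
print(thetas[np.argmax(p[:, 0])])  # 20
```

For real recordings with many bins and angles, the intermediate arrays grow as F·T·K·M. Processing one frame at a time keeps memory bounded.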

- DOAEstimator: reads p_theta_time and returns the direction-of-arrival estimates (doa_estimates) by picking, frame by frame, the angle with maximum energy.
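
The peak picking itself is a per-frame argmax. The sketch below checks it on a synthetic map whose peak moves across the angle grid. The toy data is invented for illustration, and `doa_estimator` is a hypothetical name.

```python
import numpy as np


def doa_estimator(p_theta_time, thetas_deg):
    """Return, for every frame (column), the angle with maximum power."""
    idx = np.argmax(p_theta_time, axis=0)
    return np.asarray(thetas_deg)[idx]


thetas = np.arange(-90, 91)
# Toy map: a Gaussian peak that moves from -30 to +30 degrees over 7 frames
true_path = np.linspace(-30, 30, 7)
p = np.exp(-0.5 * ((thetas[:, None] - true_path[None, :]) / 5.0) ** 2)
print(doa_estimator(p, thetas))  # [-30 -20 -10   0  10  20  30]
```

A plain argmax also returns an angle for frames where the source is silent. On real recordings, a power threshold or temporal smoothing may be worth adding.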

Everything described above is orchestrated by the Main class, which loads and normalizes data (AudioData), computes STFTs on all channels (AllChannelSTFT), builds covariance matrices (GetCovMatrix), applies beamforming over the angle range (Beamform), and extracts DOA estimates (DOAEstimator).

Visualization

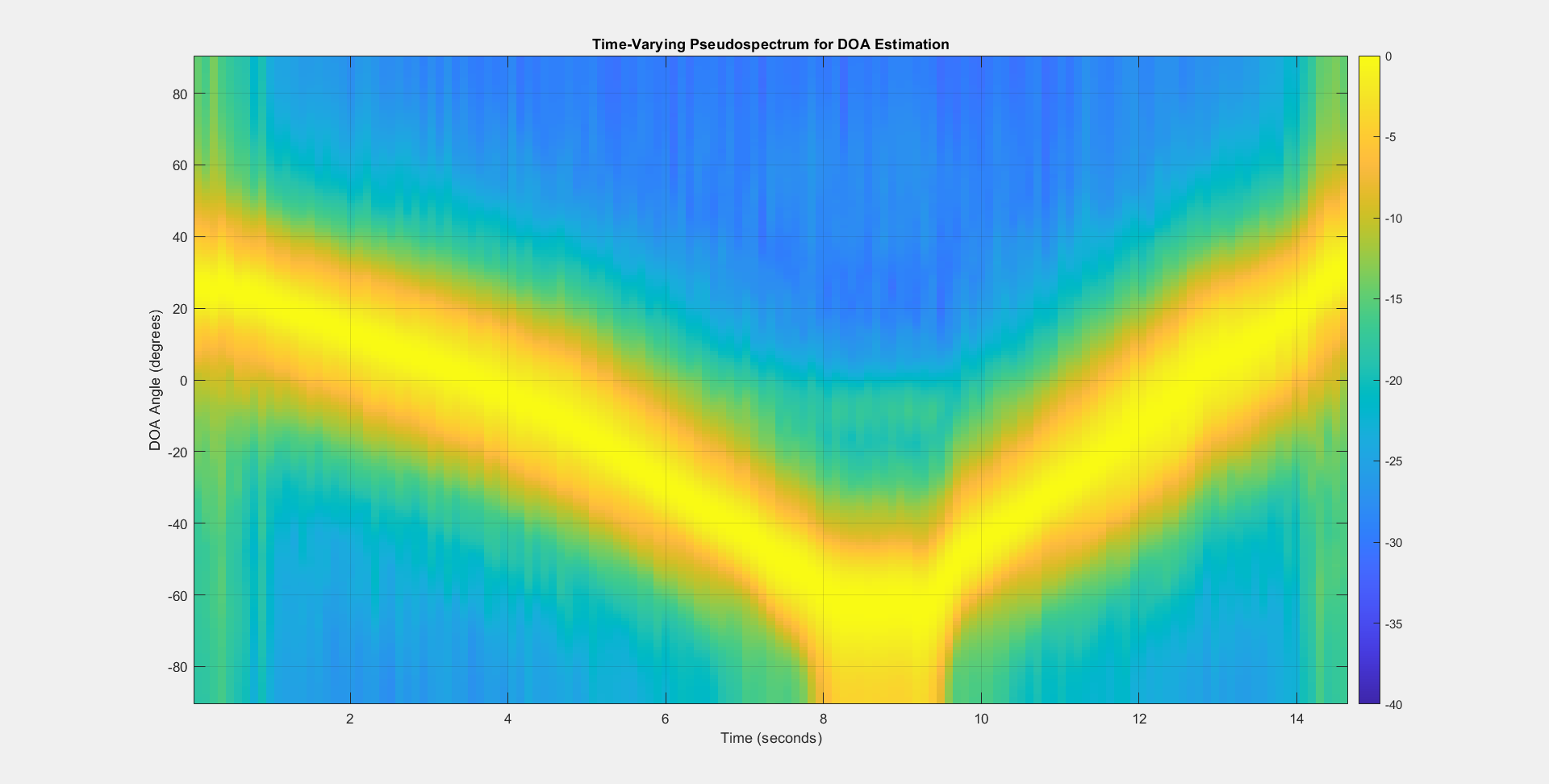

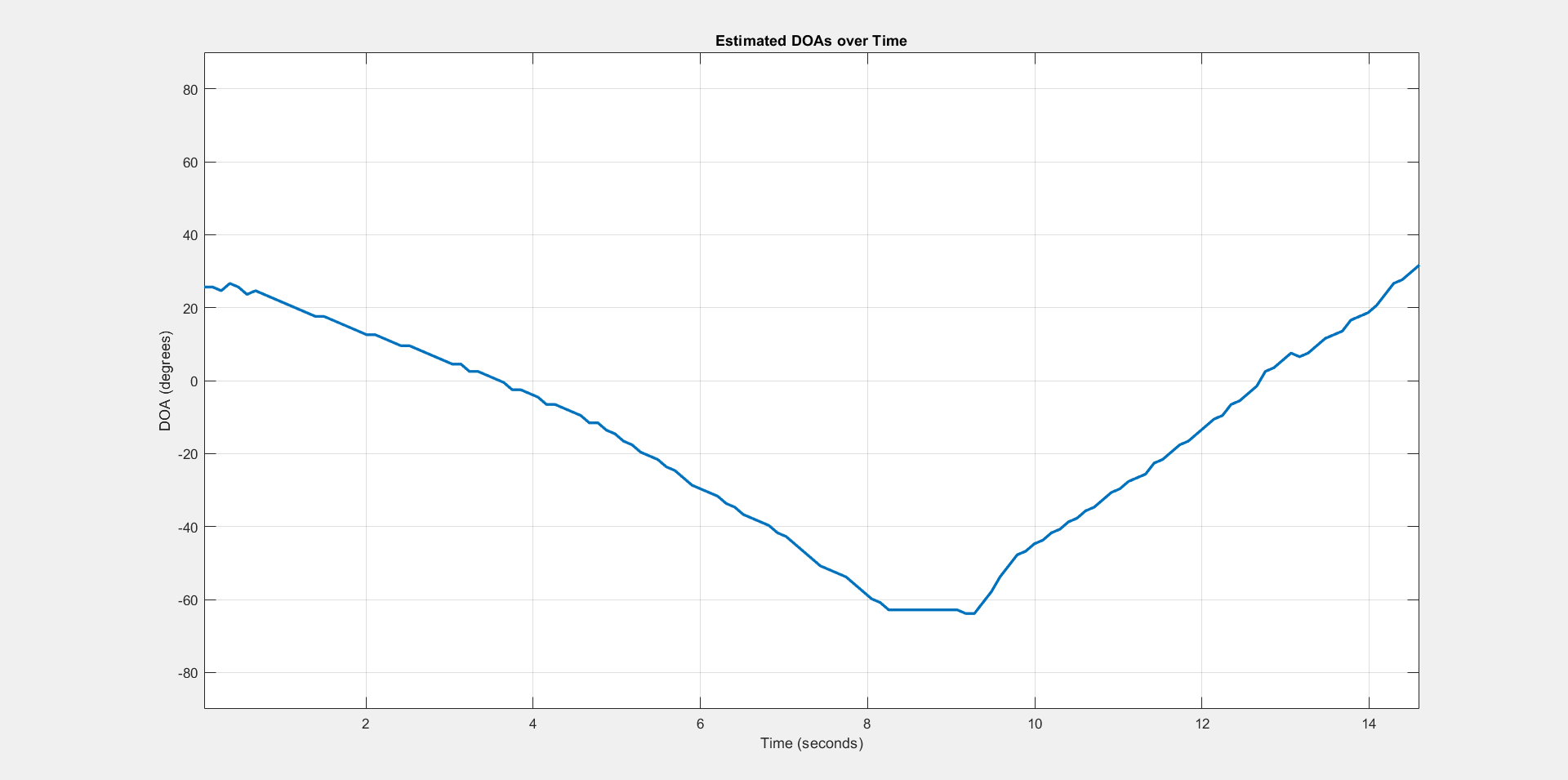

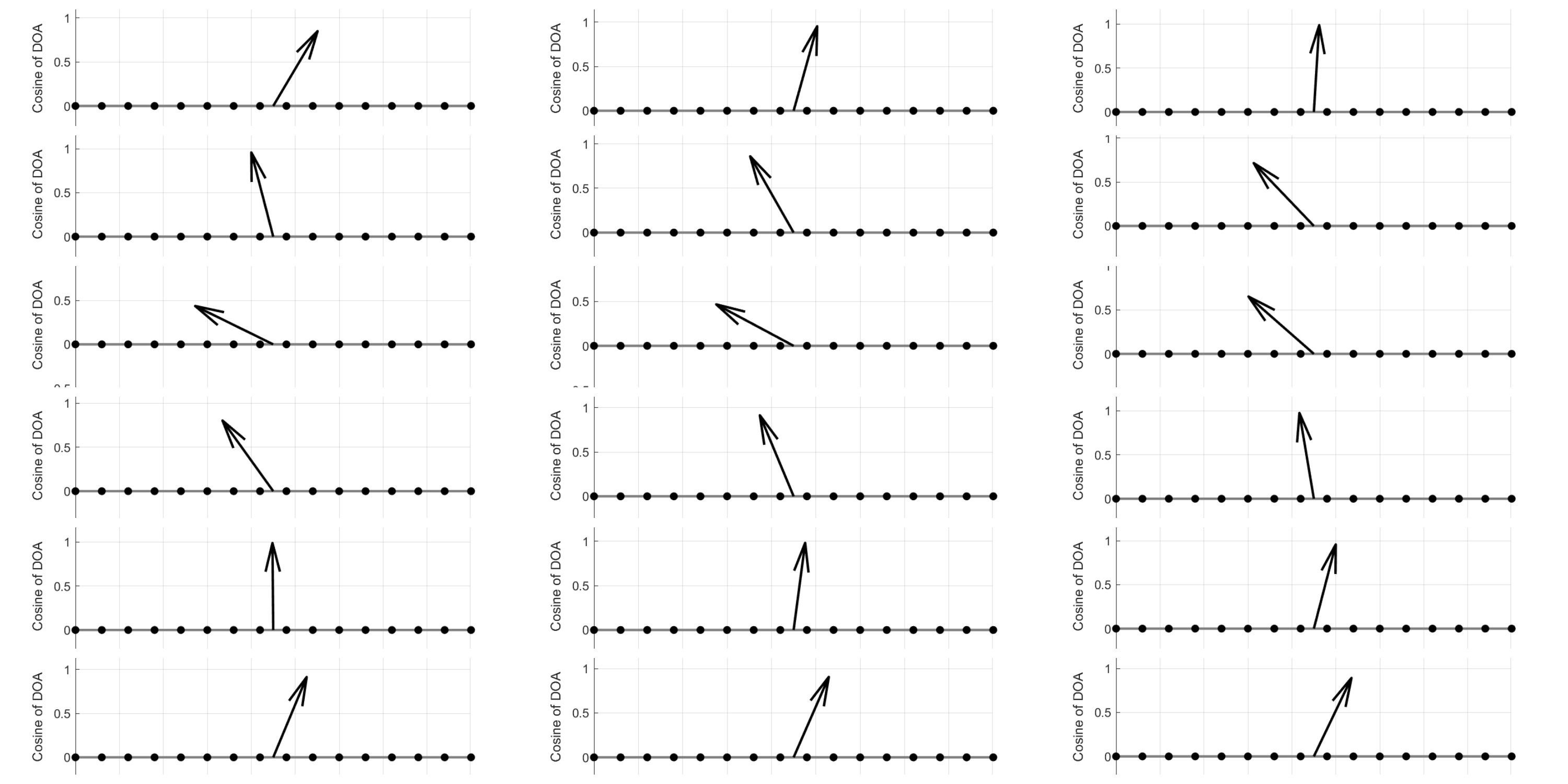

To interpret the results, I implemented the classes visualizePseudospectrum, which shows the pseudospectrum (power as a function of angle over time), and visualizeDOAEstimates, which plots the angular estimates over time. In addition, the classes getSingleFrame, framesGenerator, and videoGenerator create a sequence of frames and assemble them into a video, which makes the temporal evolution easy to follow.

The figures in this section show the visual results:

Pseudospectrum: shows how power is distributed over the angle of arrival (and over time). Peaks indicate the dominant directions, i.e., the likely source directions.

DOA over time: DOA estimates reveal the frame-by-frame trajectory of the moving source.

The analysis and tests confirm the effectiveness of the implemented beamforming. You can find the code on GitHub.